- 1. Background

- 2. Robot models

- cerberus_anymal_b_sensor_config_1

- cerberus_anymal_c_sensor_config_1

- cerberus_gagarin_sensor_config_1

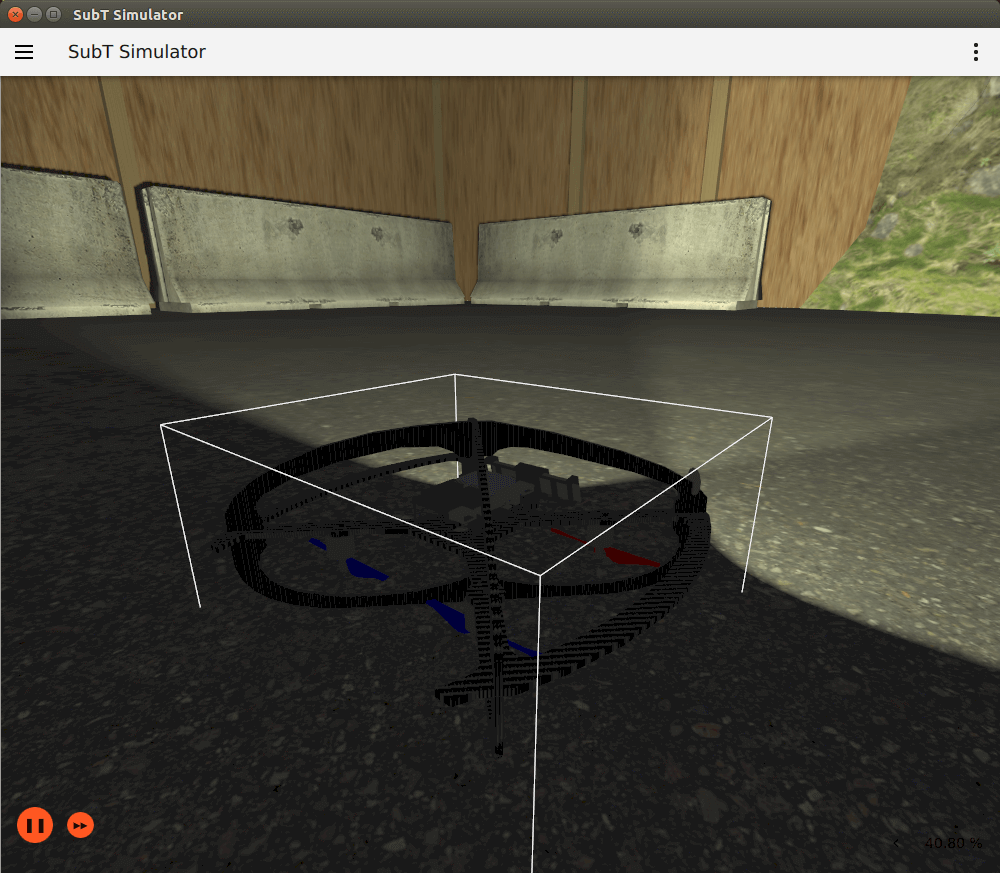

- cerberus_m100_sensor_config_1

- cerberus_rmf_sensor_config_1

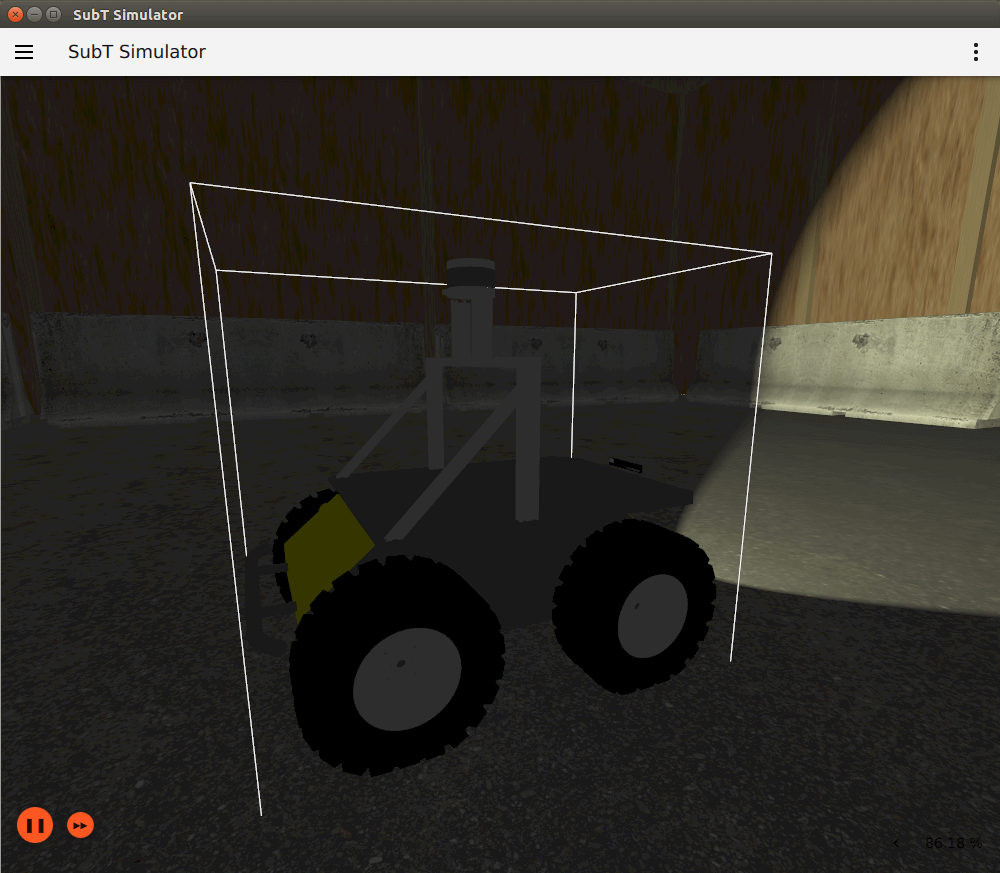

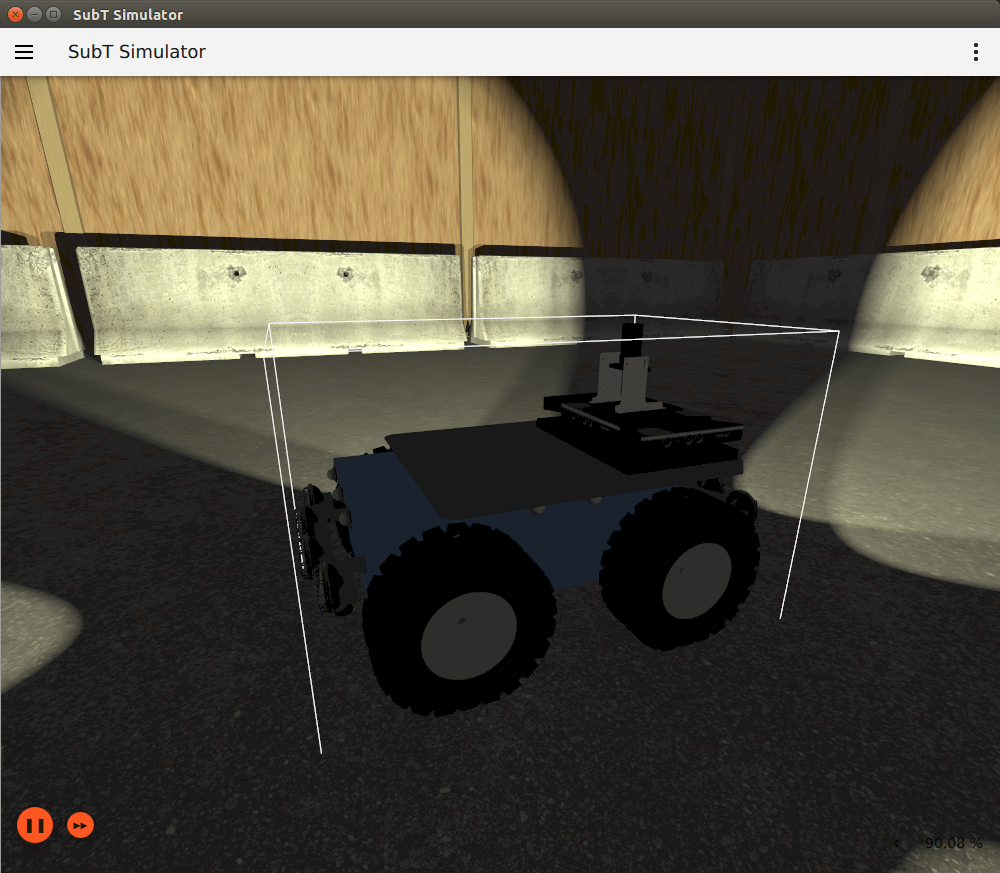

- costar_husky_sensor_config_1

- costar_shafter_sensor_config_1

- csiro_data61_dtr_sensor_config_1

- csiro_data61_ozbot_atr_sensor_config_1

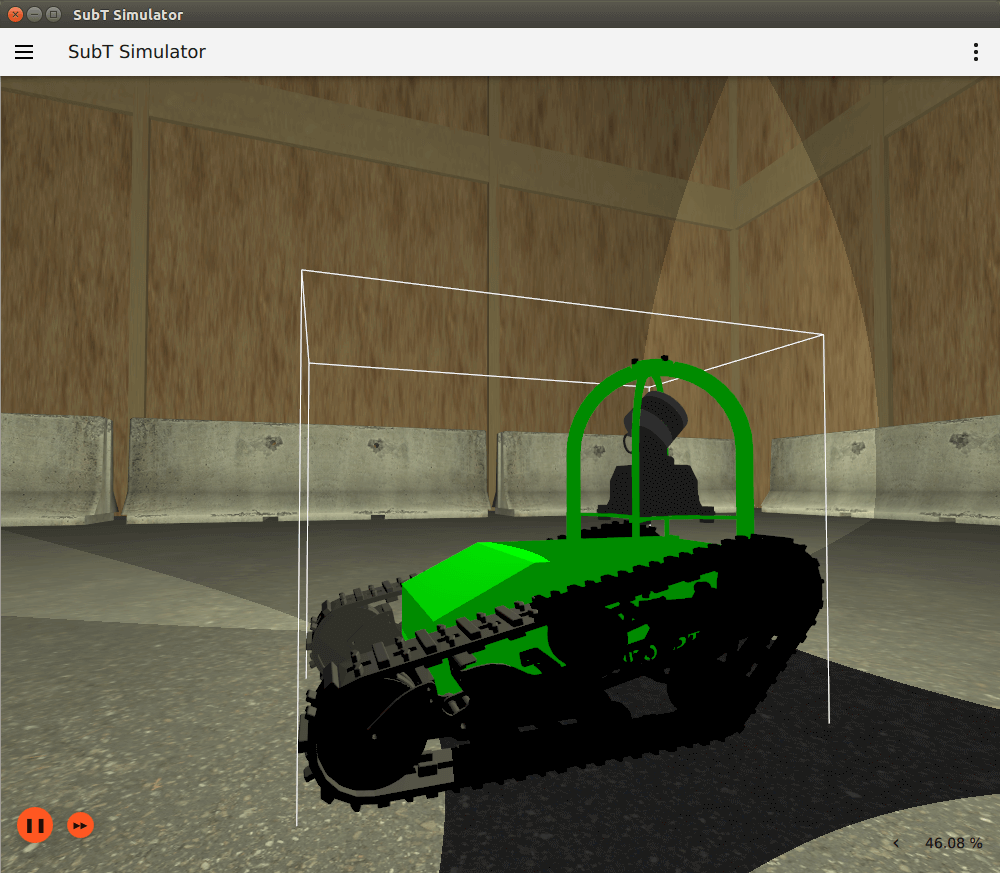

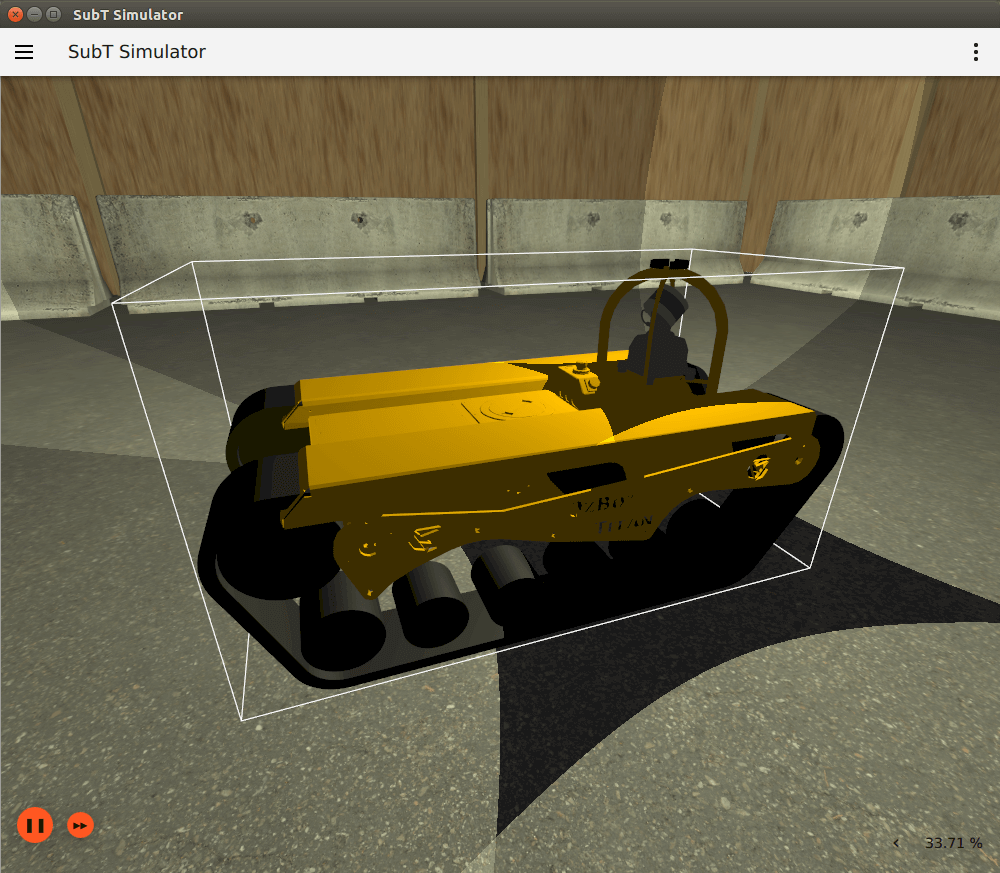

- ctu_cras_norlab_absolem_sensor_config_1

- explorer_ds1_sensor_config_1

- explorer_r2_sensor_config_1

- explorer_r3_sensor_config_1

- explorer_x1_sensor_config_1

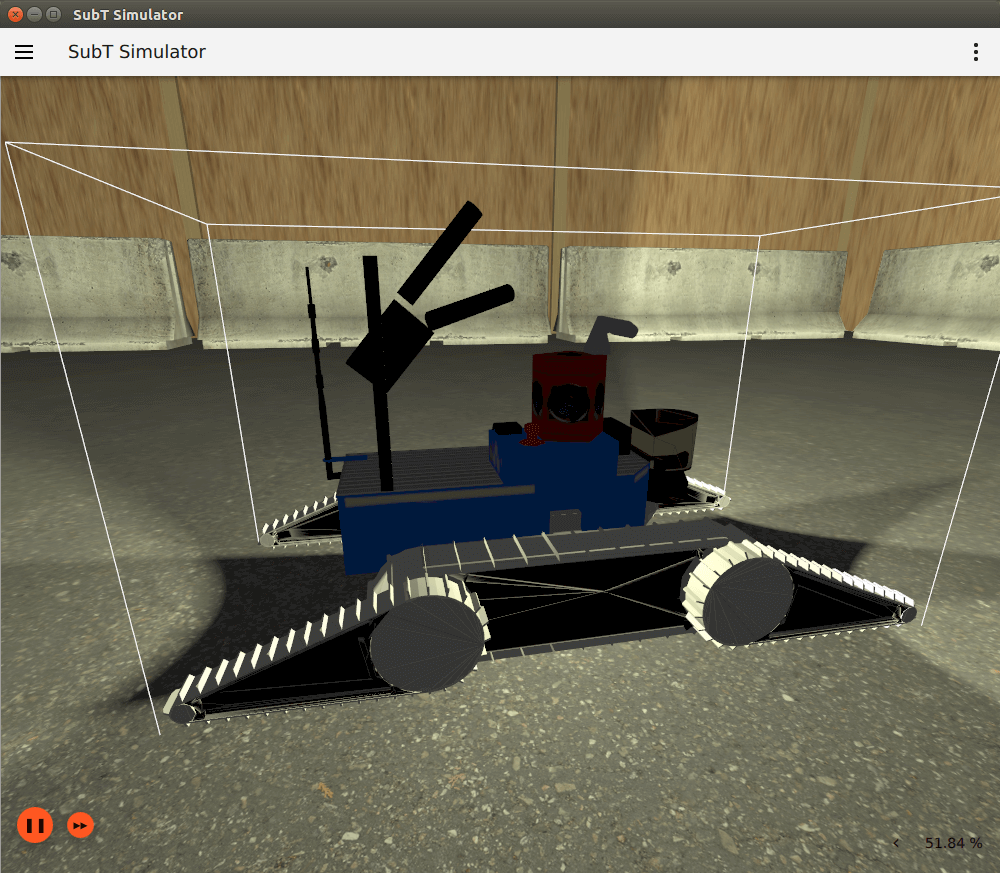

- marble_hd2_sensor_config_1

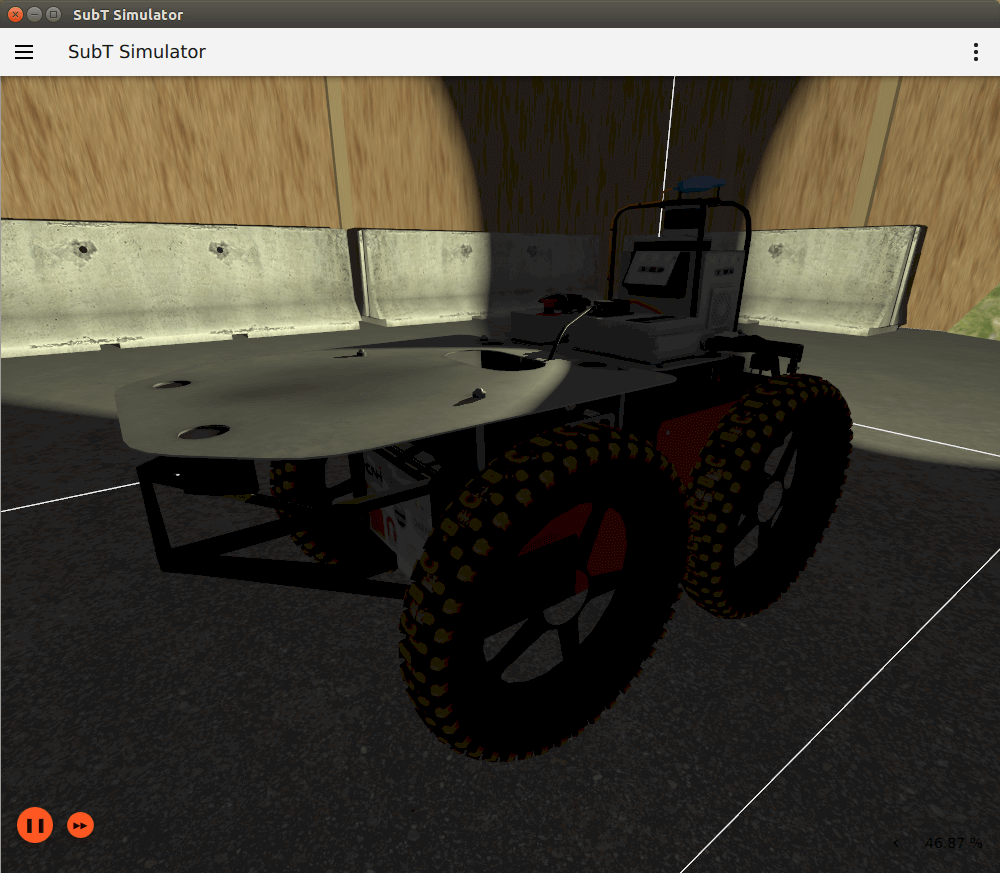

- marble_husky_sensor_config_1

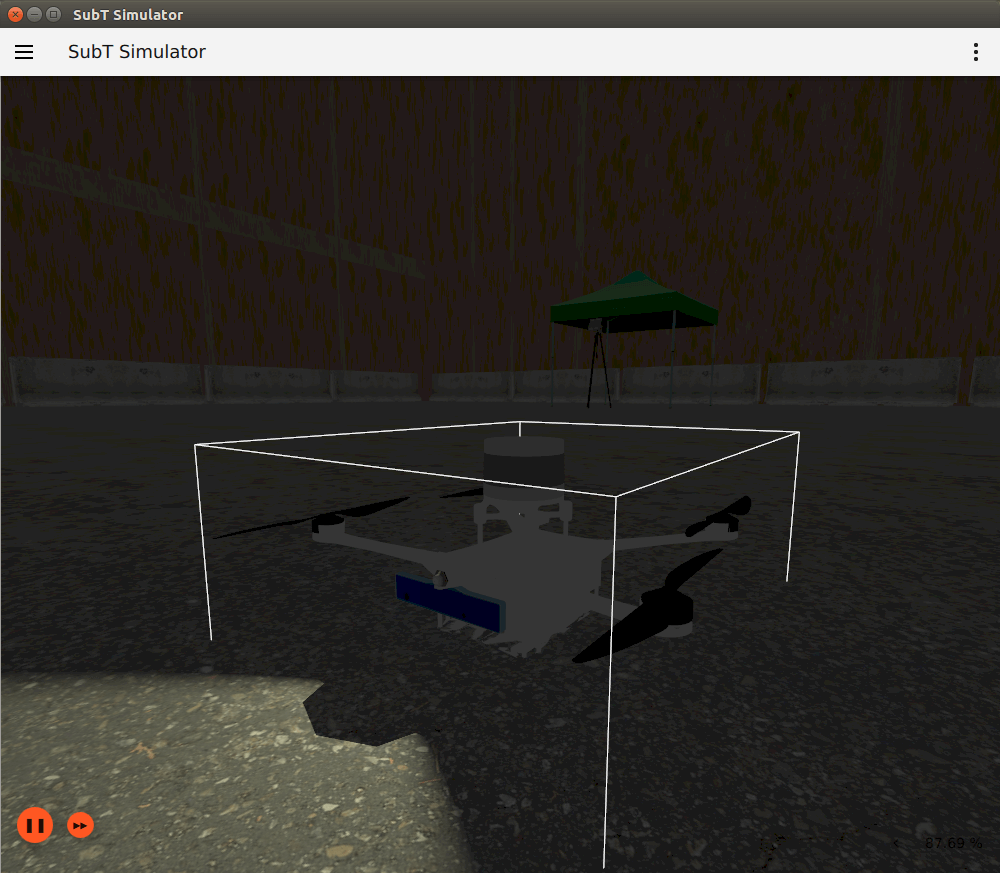

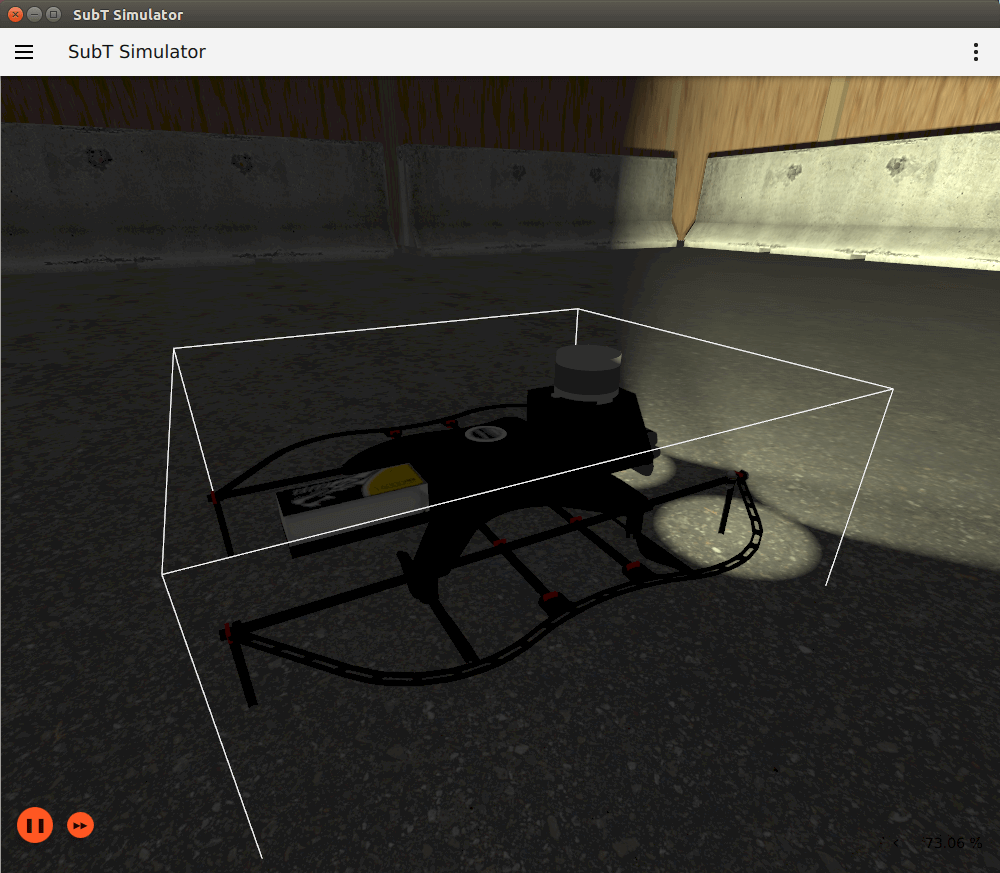

- marble_qav500_sensor_config_1

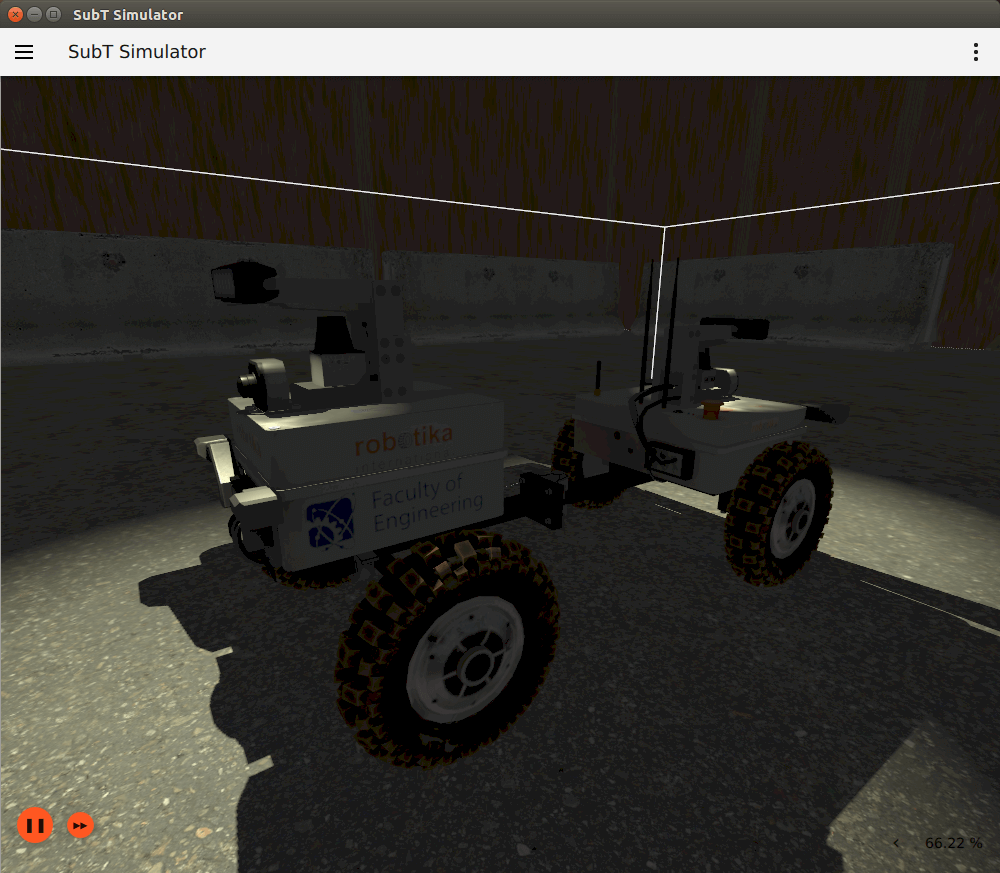

- robotika_freyja_sensor_config_1

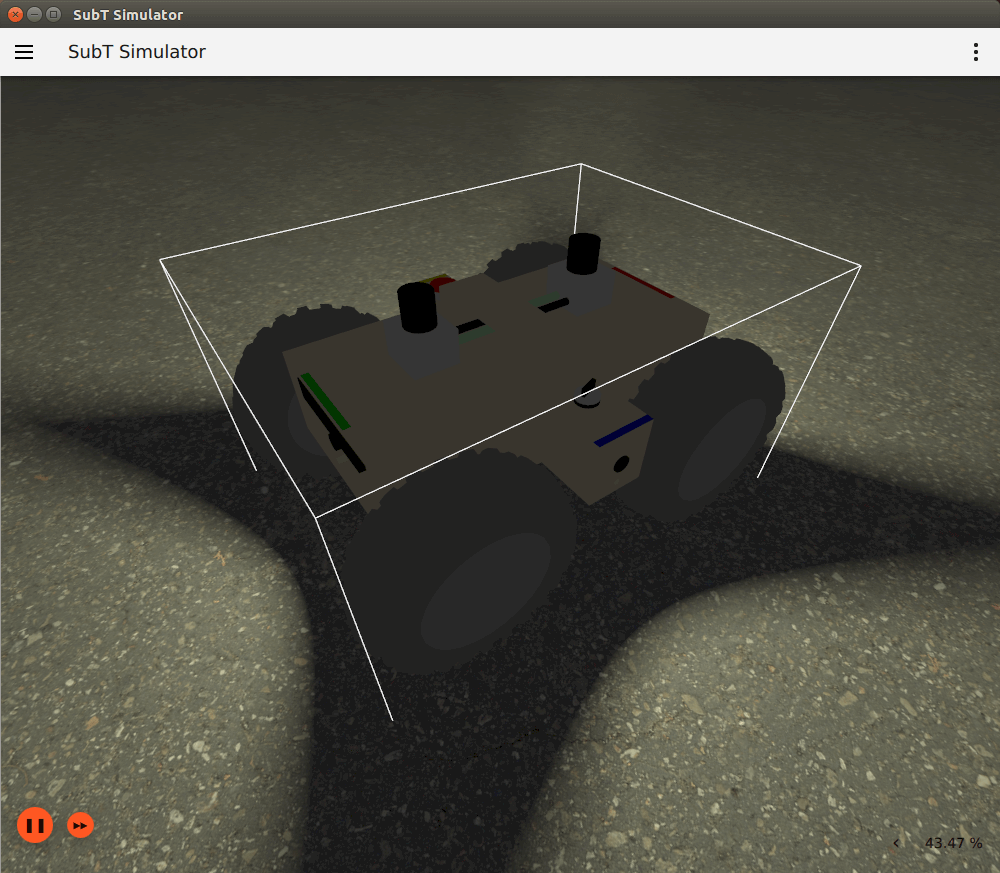

- robotika_kloubak_sensor_config_1

- robotika_x2_sensor_config_1

- sophisticated_engineering_x2_sensor_config_1

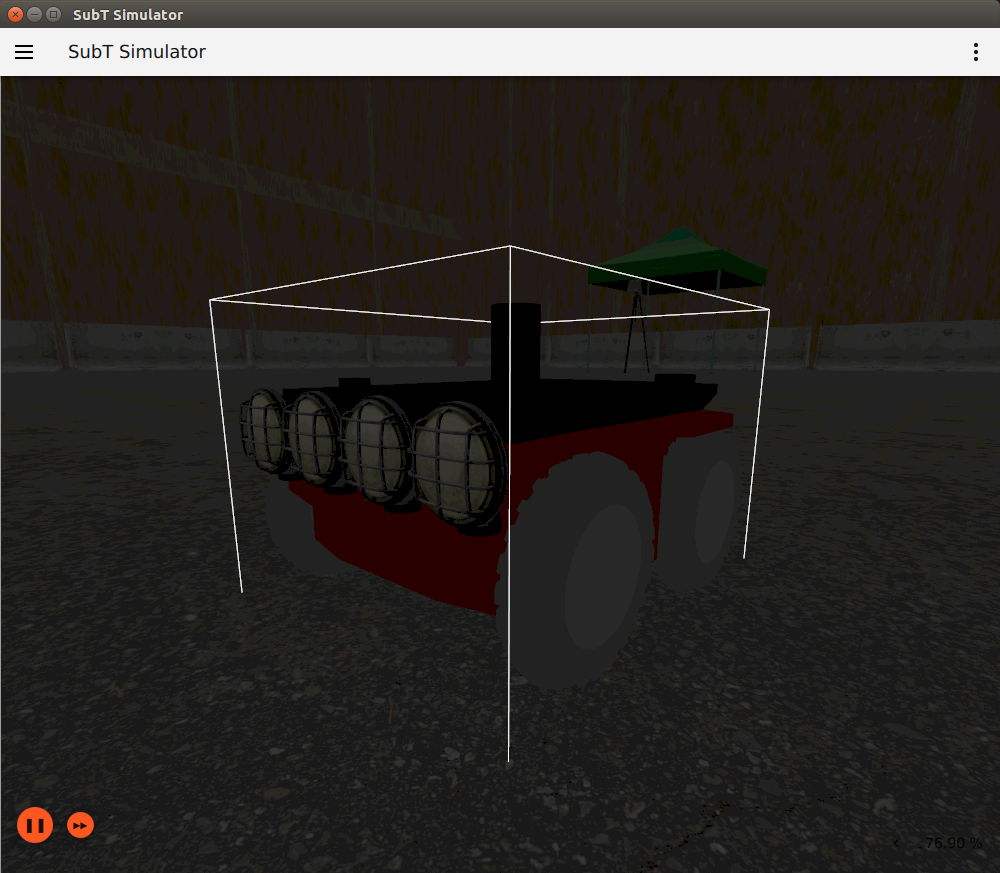

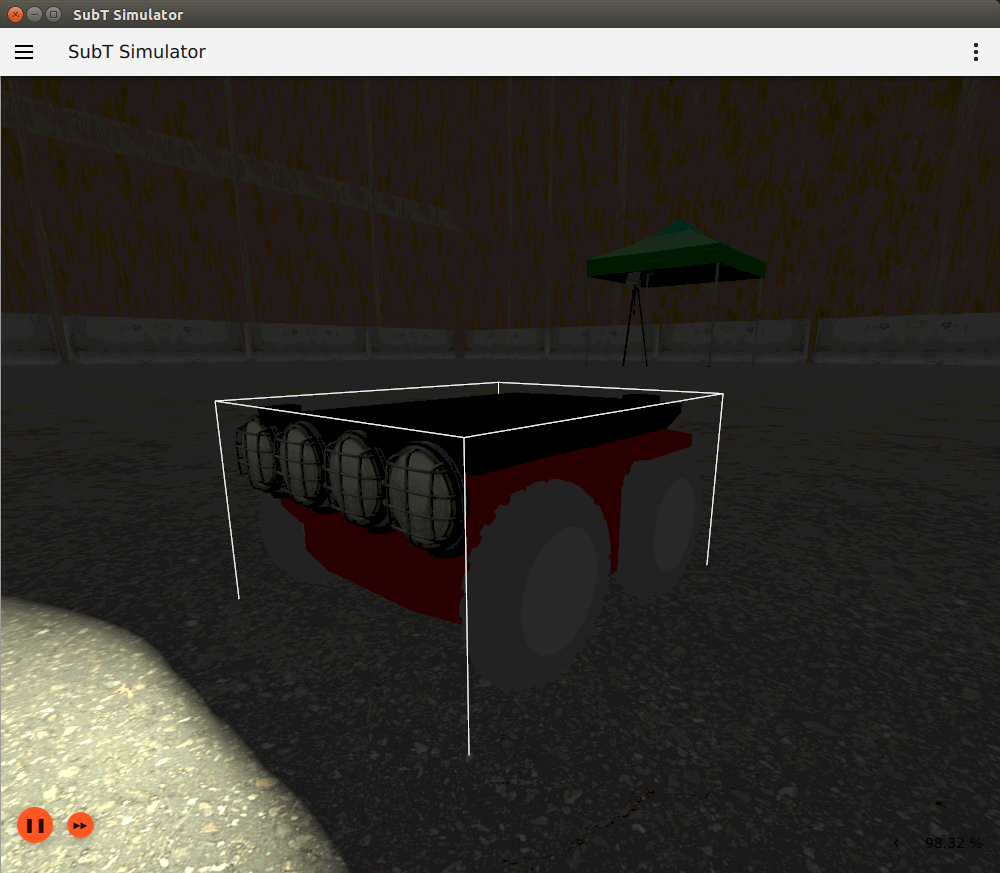

- sophisticated_engineering_x4_sensor_config_1

- ssci_x2_sensor_config_1

- ssci_x4_sensor_config_1

1. Background

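In a previous post we briefly introduced how to use SubT. This post focuses on some of the robot models that ship with the SubT GitHub project, for easy reference and later use.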

2. Robot models

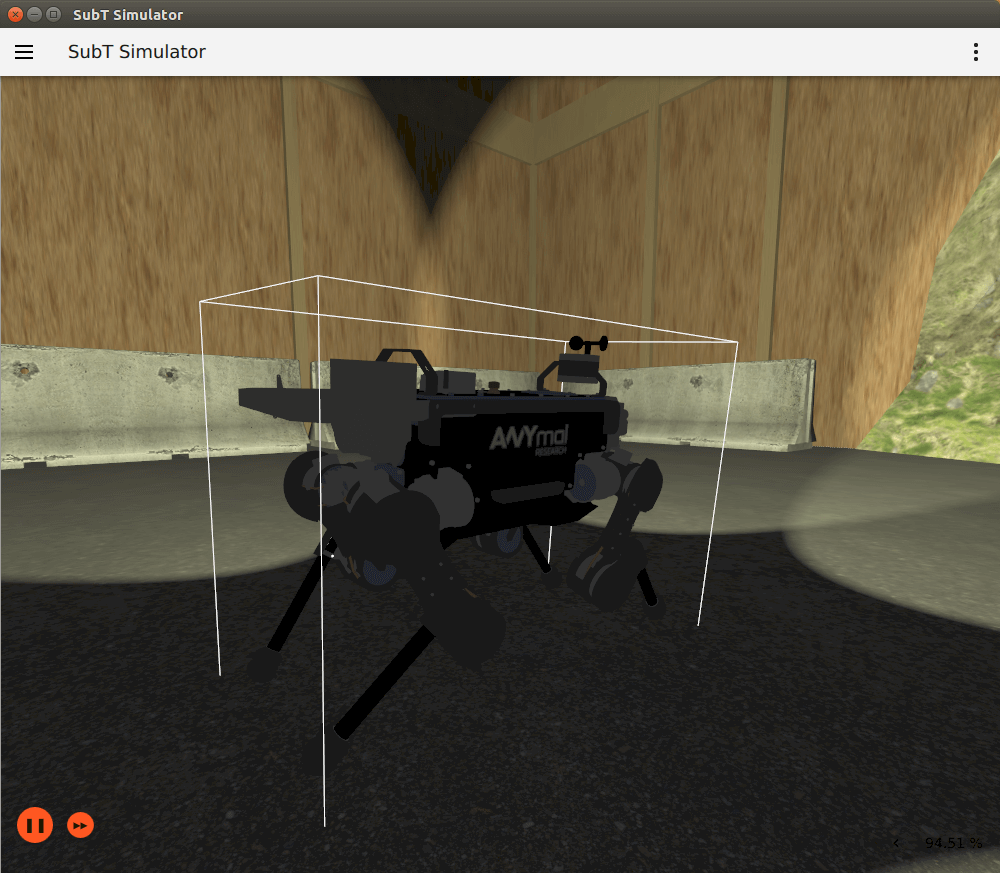

cerberus_anymal_b_sensor_config_1

Sensors

- IMU - Xsens MTi 100, modeled by the imu plugin

- LIDAR - Velodyne VLP-16, modeled by the gpu_lidar plugin

- LIDAR - Robosense RS-Bpearl; there is not (yet) an Ignition-Gazebo plugin

- Depth Camera - Intel Realsense D435, modeled by the rgbd_camera plugin

- Color Camera - FLIR Blackfly S Model #BFS-U3-16S2C-CS, modeled by the camera plugin

- Synchronization Board - Autonomous Systems Lab, ETH Zurich - VersaVIS; there is not (yet) an Ignition-Gazebo plugin

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

This ANYmal is controlled by the custom cerberus_anymal_b_control_1 package, available in the repository cerberus_anymal_locomotion.

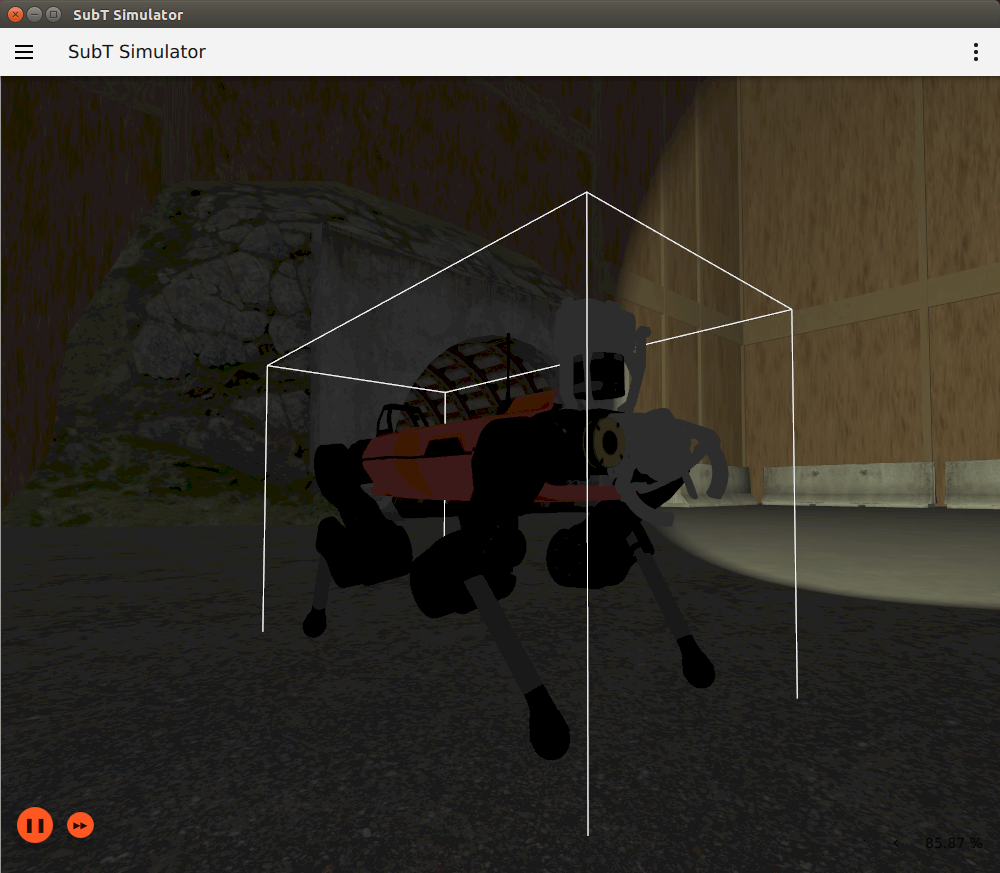

cerberus_anymal_c_sensor_config_1

Sensors

- Onboard IMU - N/A, modeled by the imu plugin

- LIDAR - Velodyne VLP-16, modeled by the gpu_lidar plugin

- LIDAR - Robosense RS-Bpearl, modeled by the gpu_lidar plugin

- VIO Perception Head - Alphasense, modeled by the imu and camera plugins

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

This ANYmal is controlled by the custom cerberus_anymal_c_control_1 package, available in the repository cerberus_anymal_locomotion.

cerberus_gagarin_sensor_config_1

Sensors

- IMU - Generic, modeled by the imu plugin

- LIDAR - Ouster OS1-16, modeled by the gpu_ray plugin

- Color Camera - FLIR Blackfly S, modeled by the camera plugin

- Depth Sensor - Picoflexx, modeled by the depth_camera plugin

Control

This Aerial Scout is controlled by the default twist controller package inside the simulation environment.

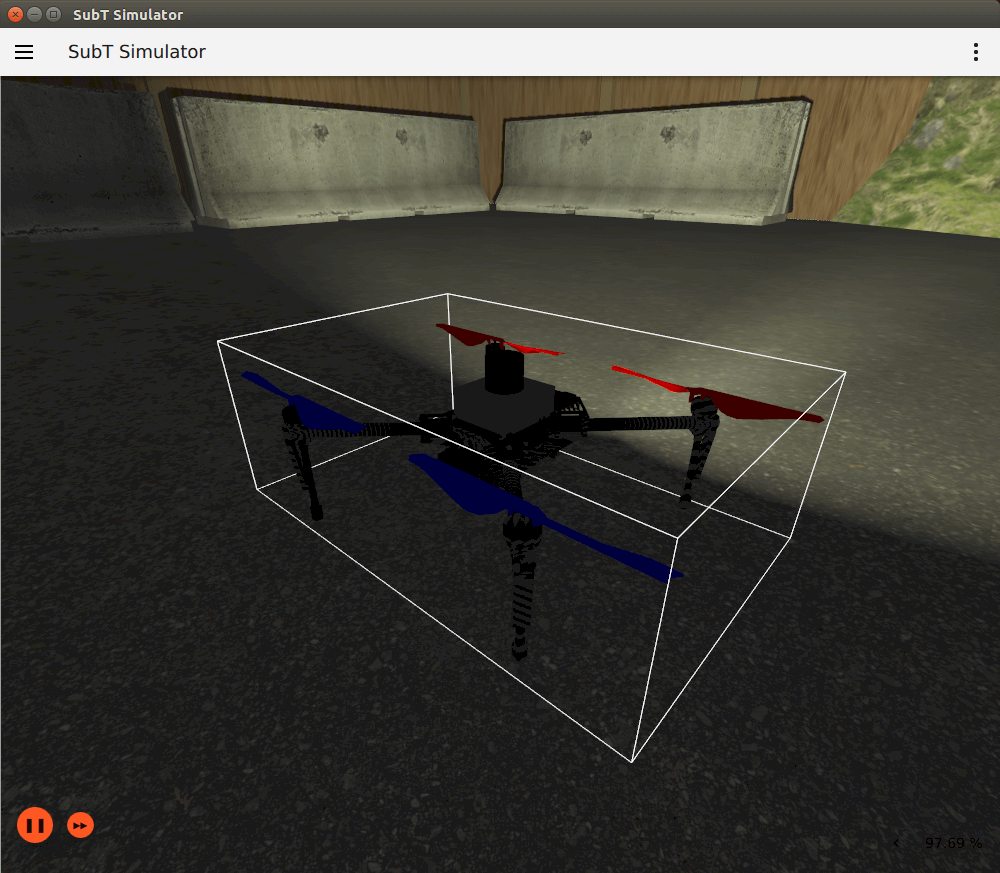

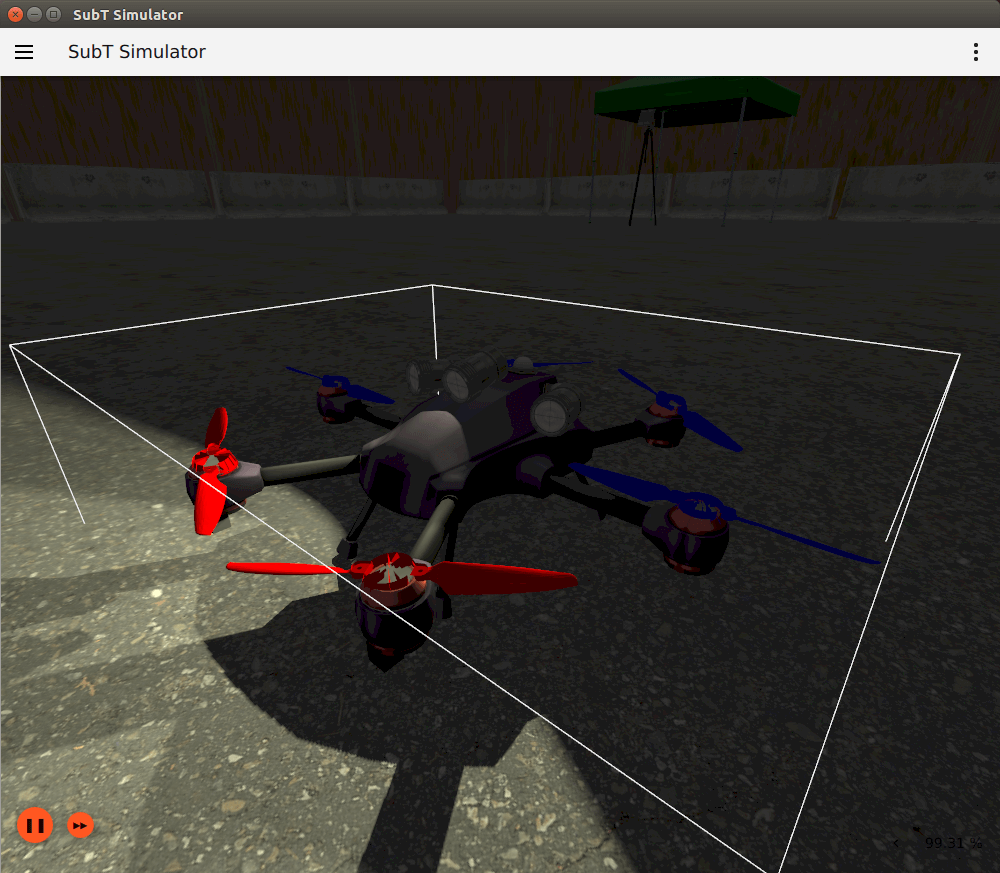

cerberus_m100_sensor_config_1

Sensors

- IMU - Vector Nav VN100, modeled by the imu plugin

- LIDAR - Velodyne VLP-16, modeled by the gpu_ray plugin

- Color Camera - FLIR Blackfly S, modeled by the camera plugin

Control

This Aerial Scout is controlled by the default twist controller package inside the simulation environment.

cerberus_rmf_sensor_config_1

Sensors

- IMU - Generic, modeled by the imu plugin

- 4x ToF Ranging sensor - VL53L0X, modeled by the gpu_ray plugin

- Tracking Camera - T265, modeled by two camera sensors (right and left) with large FoV.

Control

This Aerial Scout is controlled by the default twist controller package inside the simulation environment.

costar_husky_sensor_config_1

Sensors

CoSTAR’s Husky UGV model with an RGBD camera, a medium range lidar, cliff sensor, and IMU

Control

It is controlled by the default twist controller package inside the simulation environment.

costar_shafter_sensor_config_1

Sensors

- 1x IMU - Cube Module, modeled by the imu plugin

- 1x 3D LIDAR - Velodyne VLP-16, modeled by the gpu_ray plugin

- 1x RGBD Camera - Realsense ZR300, modeled by the rgbd_camera plugin

Control

This COSTAR Shafter platform is controlled by the default twist controller package inside the simulation environment.

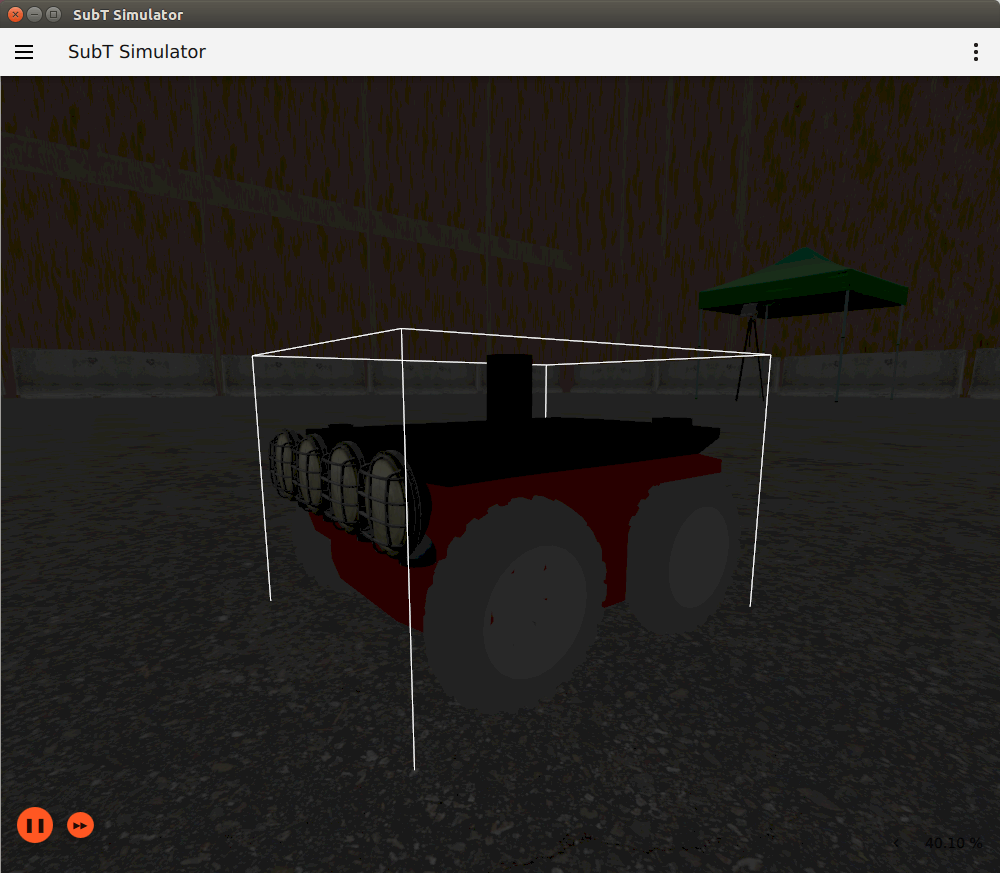

csiro_data61_dtr_sensor_config_1

Sensors

- A Velodyne VLP-16 Lidar, modeled by the gpu_lidar plugin. (note this lidar is also mounted at 45 degrees on a rotating gimbal to give a near-360 degree FOV)

- ECON e-CAM130_CUXVR Quad Camera system with each camera mounted on one side of the payload. The version on the platform is a custom set of sensors run at a resolution of 2016x1512, hardware triggered at 15fps. They are modeled by the standard camera plugin

- A Microstrain CV5-25 IMU, modeled by the standard imu plugin
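To get a rough feel for how much image data the quad camera system listed above produces, the raw rate can be estimated from the stated resolution and trigger rate. The sketch below assumes uncompressed 8-bit RGB (3 bytes per pixel), which the model description does not state; the function name is illustrative only.

```python
def raw_image_rate_bytes(width, height, fps, cameras=1, bytes_per_pixel=3):
    """Uncompressed image data rate in bytes per second.

    bytes_per_pixel=3 is an assumption (8-bit RGB); adjust it for the
    actual pixel format of the simulated camera.
    """
    return width * height * bytes_per_pixel * fps * cameras


# Four cameras at 2016x1512, hardware triggered at 15 fps.
rate = raw_image_rate_bytes(2016, 1512, fps=15, cameras=4)
print(f"{rate / 1e6:.1f} MB/s raw")
```

Numbers like this are useful mainly for deciding whether to subscribe to all four streams at once or to throttle them.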

Control

The platform is controlled by the standard diff-drive plugin for ignition gazebo, with twist inputs on “/

csiro_data61_ozbot_atr_sensor_config_1

Sensors

- A Velodyne VLP-16 Lidar, modeled by the gpu_lidar plugin. (note this lidar is also mounted at 45 degrees on a rotating gimbal to give a near-360 degree FOV)

- ECON e-CAM130_CUXVR Quad Camera system with each camera mounted on one side of the payload. The version on the platform is a custom set of sensors run at a resolution of 2016x1512, hardware triggered at 15fps. They are modeled by the standard camera plugin

- A Microstrain CV5-25 IMU, modeled by the standard imu plugin

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

The platform is controlled by the standard diff-drive plugin for ignition gazebo, with twist inputs on “/

ctu_cras_norlab_absolem_sensor_config_1

Sensors

- Pointgrey Ladybug LB-3 omnidirectional camera: modeled by 6 camera plugins. The physical “multicamera” also has six distinct cameras, and the simulated ones closely follow their placement. Each camera has a resolution of 1616x1232 px with a field of view of 78 deg. The camera captures 6 synchronized “multiimages” per second.

- D435 RGBD Camera, modeled by the rgbd_camera plugin

  - 1x fixed, downward-facing at about 30 degrees (for examining terrain)

- Sick LMS-151 2D lidar, modeled by the gpu_lidar plugin

- XSens MTI-G 710 IMU: modeled by the imu_sensor plugin.

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

This robot is controlled by the DiffDrive plugin. It accepts twist inputs which drive the vehicle along the x-direction and around the z-axis. We add 8 additional pseudo-wheels where the robot’s tracks are to better approximate a tracked vehicle (flippers are subdivided into 5 pseudo-wheels). Currently, we are not aware of a tracked-vehicle plugin for ignition-gazebo. A TrackedVehicle plugin does exist in gazebo8+, but it is not straightforward to port to ignition-gazebo. We hope to work with other SubT teams and possibly experts among the ignition-gazebo developers to address this in the future.

Flippers provide a velocity control interface, but a positional controller and a higher-level control policy are strongly suggested.

explorer_ds1_sensor_config_1

Sensors

- 3x RGBD camera - Intel RealSense Depth D435i, modeled by the rgbd_camera plugin.

- 1x 3D medium range lidar - Velodyne-16, modeled by the gpu_lidar plugin.

- 1x IMU - Xsens MTi-100, modeled by imu plugins.

Control

DS1 is controlled by the open-source teleop_twist_joy package.
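The teleop_twist_joy package turns joystick messages into twist commands by scaling selected axes, usually gated by an enable button. The following is a minimal, ROS-free sketch of that mapping. The `Twist` dataclass, the function name, the axis indices, and the scale values are all assumptions for illustration, not values from the SubT configuration.

```python
from dataclasses import dataclass


@dataclass
class Twist:
    """Simplified stand-in for a twist command (forward speed and yaw rate)."""
    linear_x: float = 0.0
    angular_z: float = 0.0


def joy_to_twist(axes, buttons, axis_linear=1, axis_angular=0,
                 scale_linear=0.5, scale_angular=1.0, enable_button=0):
    """Map joystick axes to a twist; send zero unless the enable button is held.

    All default indices and scales are hypothetical.
    """
    if not buttons[enable_button]:
        return Twist()
    return Twist(linear_x=axes[axis_linear] * scale_linear,
                 angular_z=axes[axis_angular] * scale_angular)


# Stick pushed fully forward and half left while holding the enable button.
print(joy_to_twist(axes=[0.5, 1.0], buttons=[1]))
```

Gating on an enable button means a dropped controller produces a zero command rather than a stuck velocity.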

explorer_r2_sensor_config_1

Sensors

- 4x RGBD camera - Intel RealSense Depth D435i, modeled by the rgbd_camera plugin.

- 1x 3D medium range lidar - Velodyne-16, modeled by the gpu_lidar plugin.

- 1x IMU - Xsens MTi-100, modeled by imu plugins.

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

R2 is controlled by the open-source teleop_twist_joy package.

explorer_r3_sensor_config_1

Sensors

- 4x RGBD camera - Intel RealSense Depth D435i, modeled by the rgbd_camera plugin.

- 1x 3D medium range lidar - Velodyne-16, modeled by the gpu_lidar plugin.

- 1x IMU - Xsens MTi-100, modeled by imu plugins.

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

R3 is controlled by the open-source teleop_twist_joy package.

explorer_x1_sensor_config_1

Sensors

- 4x RGBD camera

Control

It is controlled by the open-source teleop_twist_joy package.

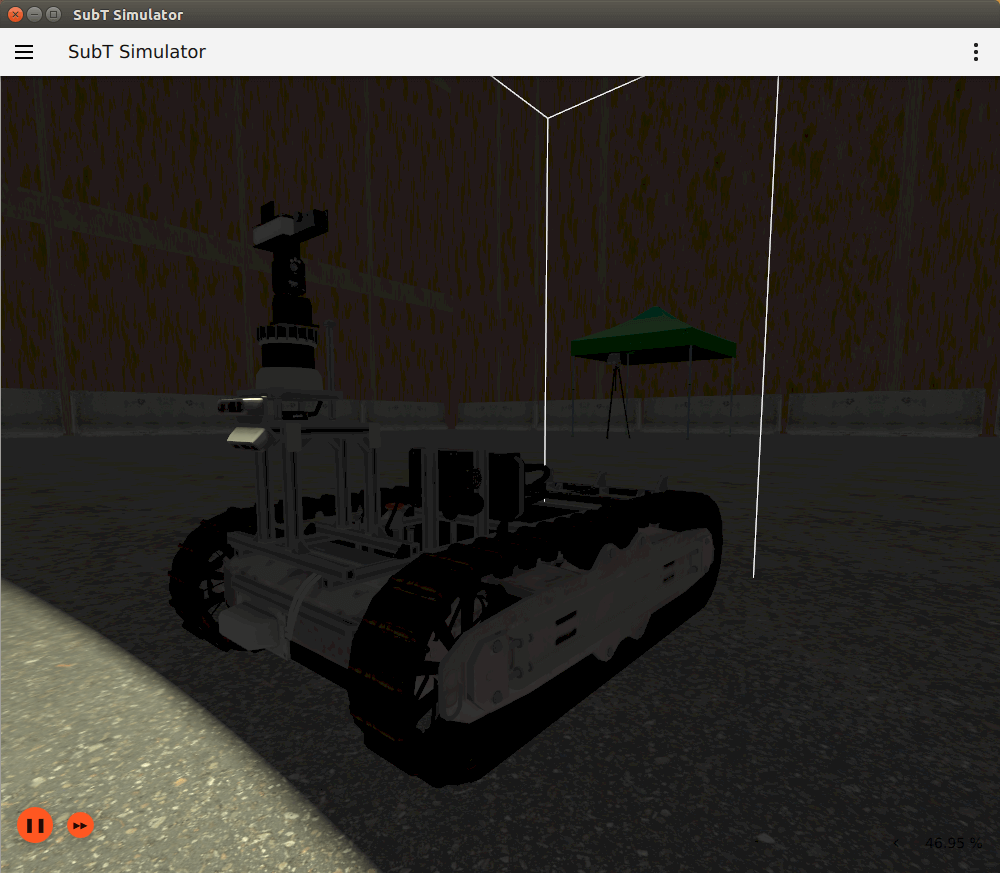

marble_hd2_sensor_config_1

Sensors

- Trossen ScorpionX MX-64 Robot Turret, modeled by JointStateController and JointStatePublisher plugins.

- D435i RGBD Camera (x3), modeled by rgbd_camera plugin

  - 1x fixed, forward-looking

  - 1x fixed, downward-facing at about 45 degrees (for examining terrain)

  - 1x gimballed (on the Trossen turret)

- Ouster 3D Lidar (64 Channel), modeled by gpu_lidar plugin

- Microstrain IMU: 3DM-GX5-25, modeled by imu_sensor plugin. Notes on modeling of the IMU are included in the model.sdf file. (located under the 3D lidar, installed at same x,y location as 3D lidar)

- RPLidar S1 Planar Lidar (under the 3D lidar), modeled by gpu_ray plugin

- Vividia HTI-301 LWIR Camera (not modeled because thermal camera not yet supported in simulator) - located on the turret next to the D435i and light.

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

This MARBLE HD2 is controlled by the DiffDrive plugin. It accepts twist inputs which drive the vehicle along the x-direction and around the z-axis. We add additional pseudo-wheels where the HD2’s treads are to better approximate a tracked vehicle. Currently, we are not aware of a tracked-vehicle plugin for ignition-gazebo. A TrackedVehicle plugin does exist in gazebo8+, but it is not straightforward to port to ignition-gazebo. We hope to work with other SubT teams and possibly experts among the ignition-gazebo developers to address this in the future.

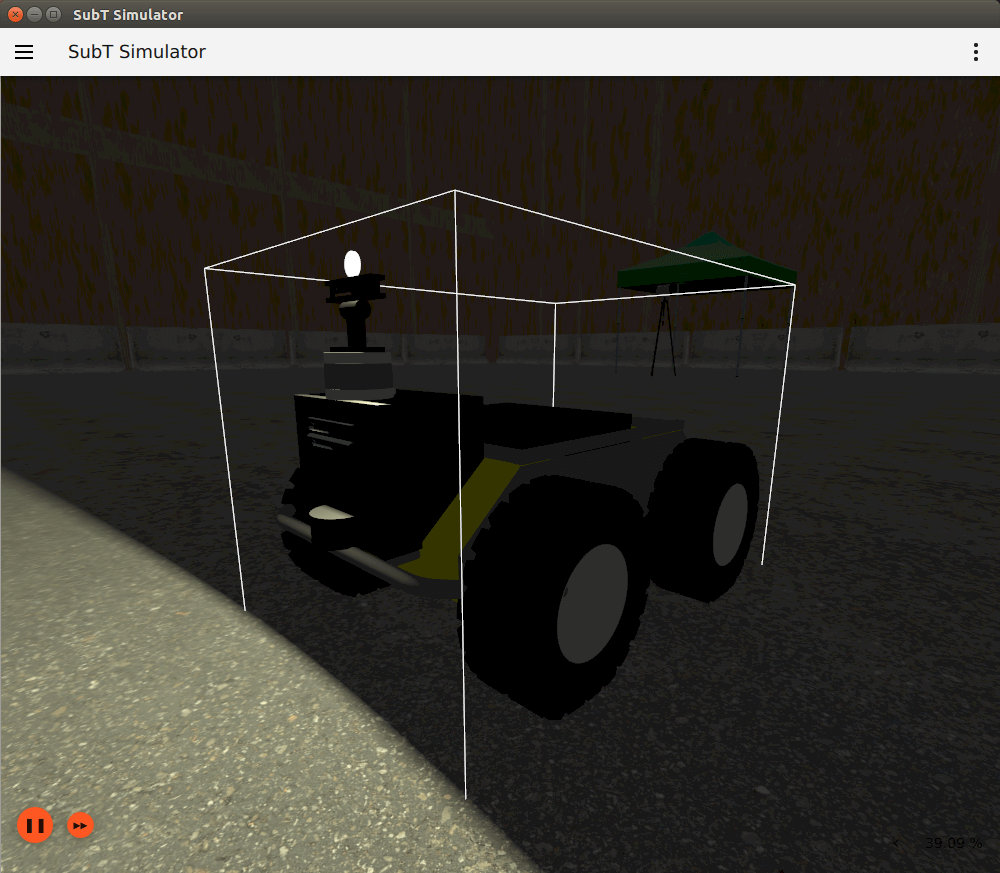

marble_husky_sensor_config_1

Sensors

- Trossen ScorpionX MX-64 Robot Turret, modeled by JointStateController and JointStatePublisher plugins.

- D435i RGBD Camera (x3), modeled by rgbd_camera plugin

  - 1x fixed, forward-looking

  - 1x fixed, downward-facing at about 45 degrees (for examining terrain)

  - 1x gimballed (on the Trossen turret)

- Ouster 3D Lidar (64 Channel), modeled by gpu_lidar plugin

- Microstrain IMU: 3DM-GX5-25, modeled by imu_sensor plugin. Notes on modeling of the IMU are included in the model.sdf file. (located under the 3D lidar, installed at same x,y location as 3D lidar)

- RPLidar S1 Planar Lidar (under the 3D lidar), modeled by gpu_ray plugin

- Vividia HTI-301 LWIR Camera (not modeled because thermal camera not yet supported in simulator) - located on the turret next to the D435i and light.

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

This MARBLE Husky is controlled by the DiffDrive plugin. It accepts twist inputs which drive the vehicle along the x-direction and around the z-axis.
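A twist input of this kind (forward speed along x, yaw rate around z) maps onto left and right wheel speeds through standard differential-drive kinematics. The sketch below shows that textbook relation only; it is not the DiffDrive plugin's source, and the wheel separation and radius values are hypothetical.

```python
def twist_to_wheel_speeds(linear_x, angular_z, wheel_separation, wheel_radius):
    """Convert a twist (m/s, rad/s) into (left, right) wheel speeds in rad/s.

    Textbook differential-drive kinematics; geometry values passed in
    are assumptions, not taken from any SubT model file.
    """
    v_left = linear_x - angular_z * wheel_separation / 2.0
    v_right = linear_x + angular_z * wheel_separation / 2.0
    return v_left / wheel_radius, v_right / wheel_radius


# Drive forward at 1 m/s while turning left at 0.5 rad/s.
left, right = twist_to_wheel_speeds(1.0, 0.5, wheel_separation=0.5, wheel_radius=0.1)
print(f"left={left:.2f} rad/s, right={right:.2f} rad/s")
```

With zero forward speed the two wheels turn in opposite directions, which is why a differential-drive base can rotate in place.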

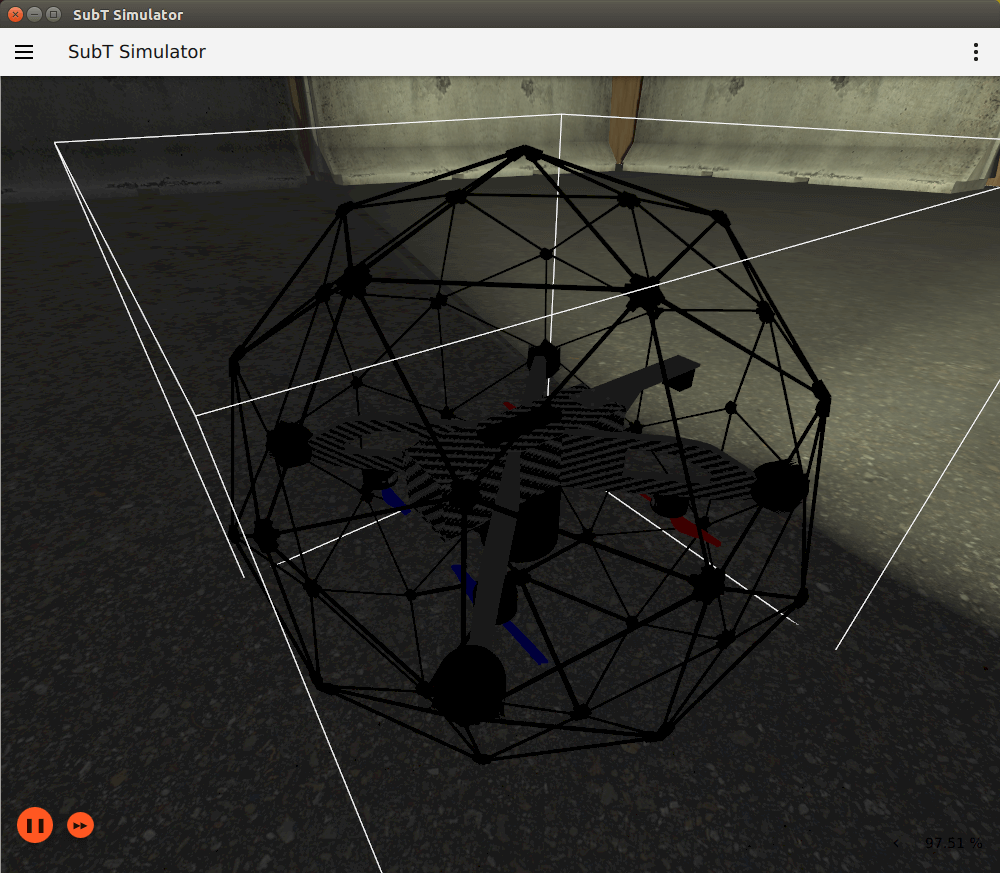

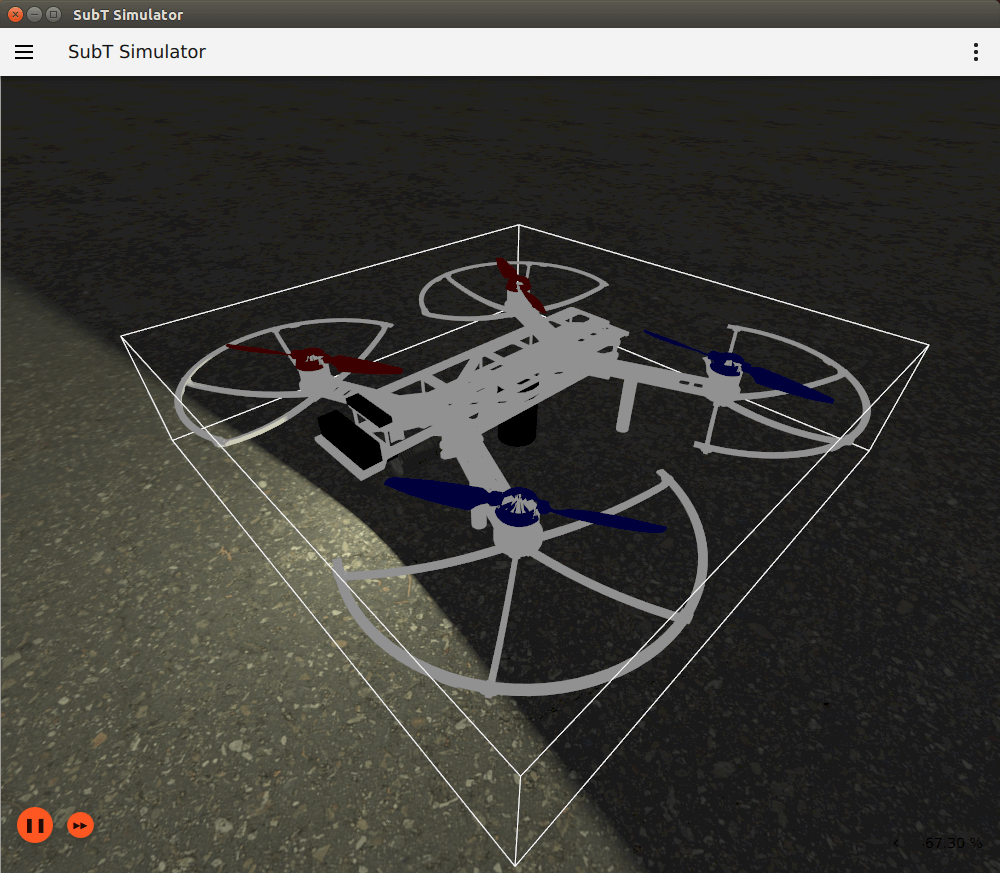

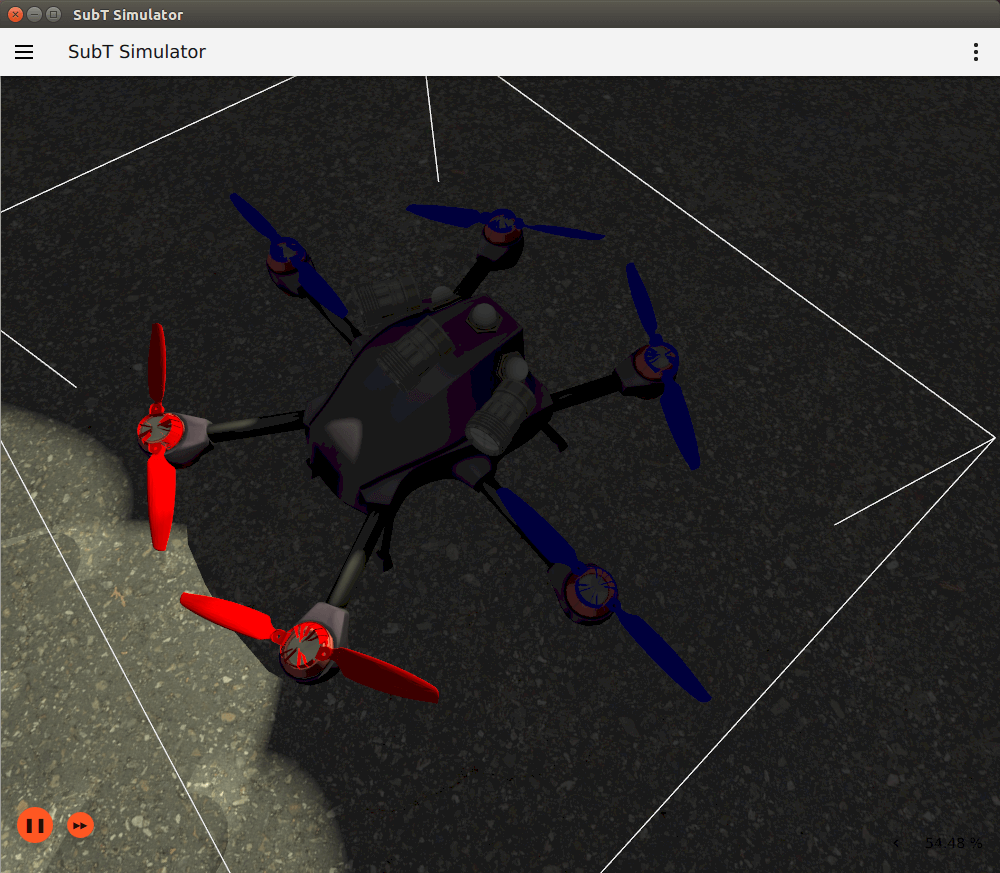

marble_qav500_sensor_config_1

Sensors

- D435i RGBD Camera, modeled by rgbd_camera plugin

  - 1x fixed, forward-looking

- Ouster 3D Lidar (64 Channel), modeled by gpu_lidar plugin

- Microstrain IMU: 3DM-GX5-15, modeled by imu_sensor plugin. Notes on modeling of the IMU are included in the model.sdf file.

- Picoflexx TOF Cameras, modeled by depth_camera plugin

  - 1 fixed on the front top

  - 1 fixed on the front bottom

Control

This vehicle is controlled by the Twist ROS topic cmd_vel.

robotika_freyja_sensor_config_1

Sensors

- 2x RGBD camera - Mynt Eye D, modeled by the rgbd_camera plugin.

- 2x Planar lidar - SICK TIM881P, modeled by the gpu_lidar plugin.

- 4x Monocular camera - KAYETON, modeled by the camera plugin.

- Tilt-compensated compass - CMPS14, modeled by the imu and magnetometer plugins.

- Altimeter - Infineon DPS310, modeled by the air_pressure plugin.

- Wheel odometry - wheel encoders, modeled by the pose-publisher plugin.

- Gas sensor - Winsen MH-Z19B, modeled by the GasEmitterDetector plugin.

- 6 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

Freyja is controlled by the open-source Osgar framework or by the custom Erro framework.

robotika_kloubak_sensor_config_1

Sensors

- 2x RGBD camera - Intel RealSense Depth D435i, modeled by the rgbd_camera plugin.

- 2x Planar lidar - SICK TIM881P, modeled by the gpu_lidar plugin.

- 2x Monocular camera - Arecont Vision AV3216DN + lens MPL 1.55, modeled by the camera plugin.

- 2x IMU - LORD Sensing 3DM-GX5, modeled by imu plugins.

- 2x Magnetometers - LORD Sensing 3DM-GX5, modeled by magnetometer plugins.

- Altimeter - Infineon DPS310, modeled by the air_pressure plugin.

- Wheel odometry - wheel encoders, modeled by the pose-publisher plugin.

- 12 communication breadcrumbs are also available as a payload for this robot in sensor configuration 2.

Control

Kloubak is controlled by the open-source Osgar framework. The robot consists of front and rear differential drives connected by a joint. The maximum joint angle is limited by the robot's construction to ±70 deg.
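A controller that commands the articulation angle would normally respect this mechanical limit. The sketch below shows one simple way to do that by clamping a requested angle. The function and constant names are hypothetical and are not taken from Osgar.

```python
import math

# Mechanical limit quoted in the model description: +/- 70 degrees.
JOINT_LIMIT_DEG = 70.0


def clamp_joint_angle(angle_rad, limit_deg=JOINT_LIMIT_DEG):
    """Clamp a requested articulation angle (radians) to the joint limit."""
    limit = math.radians(limit_deg)
    return max(-limit, min(limit, angle_rad))


# A request for 90 degrees is reduced to the 70 degree limit.
print(math.degrees(clamp_joint_angle(math.radians(90.0))))
```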

robotika_x2_sensor_config_1

Sensors

The X2 robot configuration with RealSense D435i RGBD camera (640x360, 69deg-FoV), 30m 270-deg planar lidar, IMU and Gas detector
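Given the image width and field of view quoted above, the equivalent pinhole focal length in pixels follows from f = (w / 2) / tan(FoV / 2). The sketch below assumes the 69 deg figure is the horizontal field of view and that an ideal pinhole model without distortion applies; neither is stated in the model description.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal FoV.

    Assumes an ideal, distortion-free pinhole camera.
    """
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


# 640 px wide image with an (assumed horizontal) 69 degree field of view.
print(f"{focal_length_px(640, 69.0):.1f} px")
```

The same helper applies to any of the other cameras in this post for which a resolution and field of view are given.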

Control

This vehicle is controlled by the Twist ROS topic cmd_vel.

sophisticated_engineering_x2_sensor_config_1

Sensors

This X2 with sensor configuration 1 includes the same sensors as the X2_SENSOR_CONFIG_5. Only the cameras have a wider cone angle.

Control

This X2 Config 1 can be controlled in the same way as the X2_SENSOR_CONFIG_5.

sophisticated_engineering_x4_sensor_config_1

Sensors

This X4 with sensor configuration 1 includes the same sensors as the X4_SENSOR_CONFIG_5. Only the positions of the cameras have been changed.

Control

This X4 Config 1 can be controlled in the same way as the X4_SENSOR_CONFIG_5.

ssci_x2_sensor_config_1

Sensors

An X2 ground robot with the following sensors:

- 2D lidar (based on RPLidar S1, 360 degrees)

- 2 VGA RGBD cameras: one facing up about 20 deg and one facing down 20 deg.

- An upward facing point lidar for measuring height to ceiling.

Control

This vehicle is controlled by the Twist ROS topic cmd_vel.

ssci_x4_sensor_config_1

Sensors

X4 UAV with sensor configuration #8: 3D medium range lidar, IMU, pressure sensor, magnetometer, up and down Lidar Lite V3. Originally submitted as ssci_x4_sensor_config_8.

Control

This vehicle is controlled by the Twist ROS topic cmd_vel.

This article is the author's original work. Please do not repost it without permission.